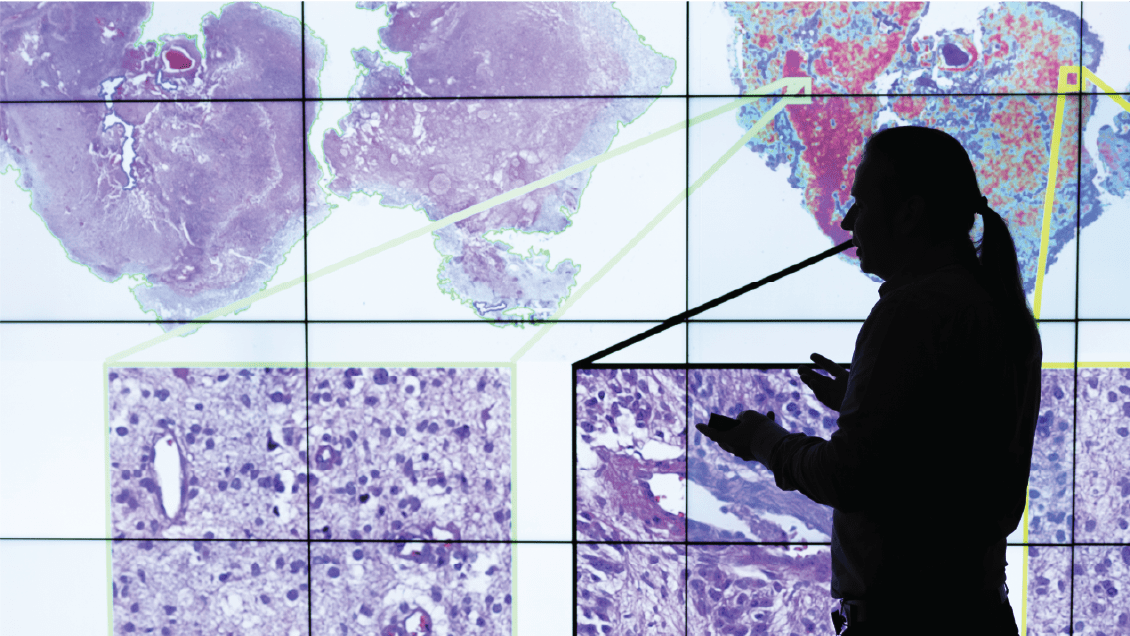

To build AI programs, humans must label large amounts of data in fine detail, and the process often isn't uniformly supervised. At the School of Medicine, physicians, nurses and researchers do this work, and their input can vary as much as a research paper differs from a social media post.

AI models also learn shortcuts, some helpful and some not. Purkayastha's lab found that models can pick up shortcuts from biases in the labeled data and use them to identify a patient's race, even when race is irrelevant to the task. His lab also found that an AI program can use physical properties of an X-ray image, such as pixel intensity, to infer a patient's race, gender and age with alarming accuracy.

“Something within the imaging process or the medical physics of capturing those images acts as a hidden signal,” he said. “How that’s done is fascinating. But it’s not (fascinating) when an AI model uses that feature to decide which patient needs something more thorough, like a PET scan, right?”

Not all shortcuts are bad; some can speed up processing. “The only way to differentiate between good and bad is when we add our human social understanding of bias,” he said.

Purkayastha said we should be able to explain such results, but he noted that many common drugs people take every day work even though we don't always know why. Accuracy and fairness in the models must be a priority, he said, even if explainability comes at the expense of performance.

“What’s most important is not causing harm. We need a setup for AI models to test them in the field, gain FDA approval, and then see in deployment that they don’t cause harm,” he said.

One key to reducing bias, Purkayastha said, is ensuring diversity among the people who create the algorithms.

“If you have a diverse group of thinkers in your team and everybody has a chance to speak or contribute, many of the problems we’re discussing get solved,” Purkayastha said. “Model biases reflect the lack of diversity in the thinking process.”

“The technology you build embeds the philosophies that you hold — whether you know it or not.”